If there’s one thing we know for certain that AI can do, it’s play games. Game-playing programs were being written before there were computers that could run them. Not only can AI play games, it is extremely good at them, much better than we are. In fact, games, these miniature worlds with precisely specified features, are the quintessential domain for AI. Games are AI’s natural habitat. Following rules in pursuit of some arbitrary goal is what AIs are all about. To the degree that they can accomplish any activity (folding proteins, writing poems, making pictures), they do so by turning that activity into a kind of game. It’s almost as if games have always had AI coiled up inside them, waiting to get out.

And yet, recently, I can’t shake the feeling that AI can’t play games. That no existing AI is capable of playing a game. Not Deep Blue, not AlphaGo, not Stockfish, TD-Gammon, or Chinook. Where did this crazy idea come from, and what does it mean?

I blame Jim Rutt. It was his little game Network Wars, which I’ve been playing obsessively for over a year, that got me started on this. I’ve written about this game before, and specifically about this topic: how the game’s simple AI “opponents” are better understood as rules or features of the game than as players. But I haven’t been able to get this idea out of my system. It has kept growing, and it now seems to me to be a kind of skeleton key for understanding AI in general. Let me see if I can give you a glimpse of what this idea feels like.

Is Bowser playing Super Mario Bros.? No, of course not, what an absurd question. Super Mario is a single-player game, and Bowser is just an element within that game: a subroutine, a set of rules. It may sort of look like Bowser is your opponent, like he and Mario are two contestants locked in a contest, but this is just a wafer-thin representational veneer applied to the surface of the game. To ask if Bowser, or, for that matter, Mario, is playing a game is to make a fundamental conceptual error, like asking if Hamlet is acting. Are dice playing Monopoly? Is the net playing Tennis?

Ok, let’s try a harder one. Is Emperor Arcturus Mengsk playing StarCraft 2: Wings of Liberty? (Mengsk is the commander of the enemy forces the player opposes in some of the missions in the single-player campaign.) Intuitively, this seems like almost exactly the same question as before. There is no Mengsk. Like Bowser, he’s just a cartoon added to the game to give thematic “juice” to some of its rules and subroutines. Asking if Mengsk is playing StarCraft is like asking if gravity is playing Tetris.

Ok, now how about this one? What if, in StarCraft 2, the player chooses “Play vs AI” and then selects the “Medium Difficulty AI”? Is that AI playing StarCraft? Now we’re talking. Intuitively, yes, of course it is!

Now what if I told you that StarCraft’s Medium AI is identical to Mengsk? What if it were the same exact code? (This is not, to my knowledge, the case, but for the sake of argument, imagine it were.) Do our intuitions change? And in which direction? Do we now think Mengsk is playing StarCraft? Or do we think the Medium AI is not? Or do we still think one is playing and the other isn’t? And if so, why? Is it simply a matter of framing? Of context and interface? At any given moment, the exact same thing is happening: you’re selecting your units and giving them commands, and the software is responding by selecting its units and giving them commands. If I didn’t tell you which menu button you had clicked to start this battle, there would be no way to tell the difference. Is there really a difference?

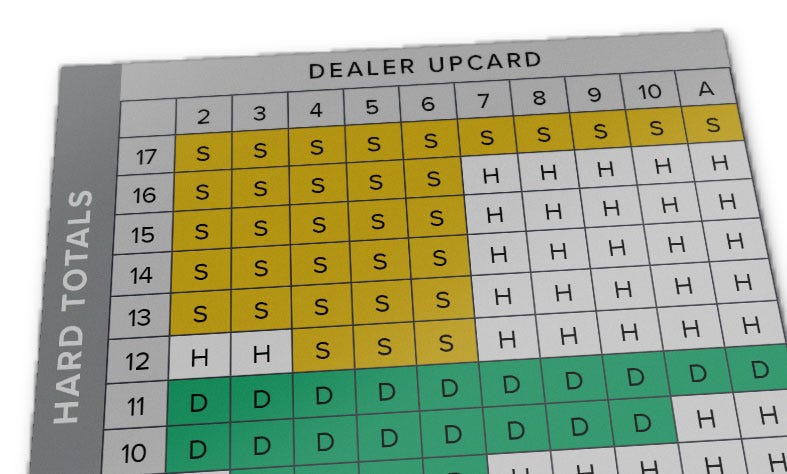

Let’s consider a different example. Take a card on which are printed instructions for the optimal way to play Blackjack. Is this card playing Blackjack? Obviously not. What about a person who is using this card and following these instructions, are they playing Blackjack? I would say yes, certainly they are. So what’s the difference?

Optimal Blackjack instructions are a strategy within the game of Blackjack: a complete description of which moves to make under which conditions. It doesn’t make sense to say that a strategy plays a game. Players use strategies to play a game. They pick strategies, they develop strategies, they apply strategies, they follow strategies. But strategies themselves don’t play games; that much seems pretty straightforward.
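To make “a complete description of which moves to make under which conditions” concrete, here is a minimal sketch of a strategy card written as a Python function. It is deliberately simplified and should not be read as a full basic-strategy chart: it assumes hard totals only, leaves out doubling, splitting, surrender, and soft hands, and the name `strategy_card` is made up for illustration.

```python
def strategy_card(player_total: int, dealer_upcard: int) -> str:
    """A simplified Blackjack strategy card for hard totals.

    Returns "S" (stand) or "H" (hit). The dealer's upcard runs from 2 to 11,
    with face cards counted as 10 and an Ace as 11. Doubling, splitting,
    surrender, and soft hands are deliberately left out of this sketch.
    """
    if player_total >= 17:
        return "S"  # stand on hard 17 or more
    if 13 <= player_total <= 16:
        # stand against a weak dealer upcard (2-6), otherwise hit
        return "S" if 2 <= dealer_upcard <= 6 else "H"
    if player_total == 12:
        return "S" if 4 <= dealer_upcard <= 6 else "H"
    return "H"  # 11 or less: no single card can bust you, so take one


# The card "sees the difference" between a dealer 6 and a dealer 7.
print(strategy_card(16, 6))  # S
print(strategy_card(16, 7))  # H
```

Everything the function can respond to is in its two parameters: a hand total and an upcard. There is no argument through which it could be told that it is in Blackjack rather than Gin Rummy, let alone decide whether it wants to be.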

What about a Blackjack dealer? Is she playing Blackjack? Intuitively, I would say no. She seems a lot like Bowser, more like a mechanism within the game than a player. But isn’t the dealer executing a strategy, just like the player using the Blackjack strategy card? Not really, no. If the dealer deviated from her prescribed procedure, if, for example, due to a lapse of attention or a capricious whim, she neglected to stand on 17, the actual players could stop the game, point out the error, claim that the game had broken, and demand a reset, just as if the wind blew down the net in a game of Tennis. The human dealer is really just a method for implementing the rules of the game. The rules are arranged to make the dealer look like a player: she “has” cards, she “takes” actions, but really there’s no player there, just rules. Just like Bowser, Mengsk, and the AIs in Network Wars.
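The difference between the player’s card and the dealer’s procedure can be sketched in code too. In this hypothetical sketch (the names `dealer_must_hit`, `check_dealer_action`, and `GameBroken` are invented for illustration, and the common variant where the dealer hits a soft 17 is ignored), the dealer’s rule is not something the dealer chooses; it is a check the game enforces. A player who ignores the strategy card has merely made a bad move. A dealer who ignores the rule has produced an invalid game.

```python
class GameBroken(Exception):
    """Raised when a move violates the rules, so the hand has to be replayed."""


def dealer_must_hit(dealer_total: int) -> bool:
    # Assumed house rule: the dealer hits on 16 or less and stands on 17 or more.
    return dealer_total < 17


def check_dealer_action(dealer_total: int, action: str) -> None:
    """Validate the dealer's move ("H" or "S") against the house rule."""
    required = "H" if dealer_must_hit(dealer_total) else "S"
    if action != required:
        raise GameBroken(
            f"dealer chose {action!r} on {dealer_total}, "
            f"but the rules require {required!r}"
        )


check_dealer_action(17, "S")  # legal: the game goes on
try:
    check_dealer_action(17, "H")  # a lapse of attention
except GameBroken as err:
    print("Stop the game:", err)
```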

What about the casino? Is the casino playing Blackjack? You aren’t really playing against the dealer, but are you playing against the house? Huh. Yeah, strangely enough, it kinda seems to me like the casino is playing Blackjack. Not the building, obviously, but the… the corporate entity, I guess. How could that be? How could this arrangement of people and rules and norms and laws and equipment and software and whatever else comprises a casino play a game? Well, for one thing, unlike dealers, nets, and Bowsers, the casino chooses to play. The casino voluntarily offers the game to the other players, and can quit playing when it wants to. Also, the casino picks a strategy: what stakes to accept, when to shuffle, how many decks to put in a shoe. And these things work together. The casino is aware that it is playing a game. It sees the game, decides to enter into it, notices the results, and can change its strategy.

The Blackjack strategy card sort of does some of these things. It can, in a crude sense, “see the difference” between the dealer showing a 6 and a 7, and “choose” whether to stand or hit. But even if you grant this generous animism, the card can never “see” the game. In order to see a thing, you have to be able to pick it out from the background. You have to be able to distinguish between it and other things. The card can (sort of) distinguish between 6s and 7s, and between S and H (stand and hit). That allows it, in a sense, to “move through” the world of Blackjack. So let’s be wildly imaginative and picture this thing as a tiny creature skittering through Blackjack space. Even then, it could never distinguish between Blackjack and Gin Rummy. How could it? Even our crazy animist fantasy version of this thing is fully contained inside of Blackjack. How could it ever see it?1 Maybe you could make one that did. You’d need a very large card.

Let’s consider some other test cases. What about a little kid who barely understands the rules? Are they playing a game? I would say yes. In fact, I would say a little kid who barely understands the rules is, in some ways, the quintessential game player. (Sometimes the best player in the world at a game doesn’t know the rules.) What about animals? Is a dog playing fetch playing the game of Fetch? Again, I would say yes.

It’s also possible to think of lots of ambiguous cases, grey areas where something is sort of a game, or where someone is sort of playing. Is the Michael Douglas character playing the game in David Fincher’s The Game? If you force someone to play a game against their will, are they playing? What counts as sufficient force? Whining? Cajoling? We’ve all been at card parties where someone who doesn’t like Bridge was strong-armed into being a reluctant fourth.2 Were they playing the game?

So we have some examples of things that seem sort of like they might be playing a game but which, on closer examination, are clearly not. They are rules, mechanics, features, strategies. In contrast, we can think of examples of things that clearly are playing games: little kids, animals, casinos. And there are border cases where it’s just not clear.

So where does AlphaGo fit in this menagerie? And why does any of this matter? Is this just semantics? Just definitional nitpicking? Or, worse yet, is it AI-skeptic goalpost moving? One of those situations where, as soon as AI does a thing, we decide it’s not really a thing, or the AI is not really doing it, or not doing it in the way we do it, and therefore it doesn’t really count. One of my game designer friends, when I told him my suspicion that AlphaGo wasn’t playing Go any more than Bowser was playing Super Mario Bros., responded:

> or any more than an airplane is actually flying, given that it doesn’t flap its wings. Deep Blue was an amazing and brilliant Chess player, full stop. Because we only have one way to actually measure the brilliance of chess players.

But I think it does matter. And here’s why. Because AlphaGo is broken. I, a mediocre amateur Go player, can beat AlphaGo. OK, well, technically, I can’t even actually play against AlphaGo, but I can beat KataGo, as demonstrated by the brilliant work of Tony Wang et al. in Adversarial Policies Beat Superhuman Go AIs, which I’ve written about before. KataGo, like all the other top Go AIs, is based on AlphaZero, AlphaGo’s superior successor, and they’re all broken in this way.

And the way modern Go AI is broken is fundamentally related to this exact issue. The reason that you and I can beat superhuman Go AIs with a stupid trick is that they are stuck inside the game. They can’t see the game. They can’t see that they are losing, over and over again, to a moron. Because, unlike my 3-year-old granddaughter, and my cat Duke (RIP), and Michael Douglas, and The Golden Nugget, they have no concept of what a game is, or of what winning and losing are. They are a complete and thorough game-playing process, a complete set of instructions for how to play, for which moves to make under which conditions. But this just makes them a very complex strategy, able to see moves with superhuman acuity, but utterly incapable of seeing the game itself.

In one sense, though, this is a problem that’s easy to fix. The KataGo team has probably already patched, or is in the process of patching, the code so that the specific dumb trick of this particular adversarial strategy no longer works against it. And that’s the fix. No, not the patch, but the overall process of Team KataGo noticing that the AI is losing, caring that it’s losing, and deciding to do something different. This is what playing a game looks like!
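Here is a toy illustration of where that noticing lives. It is a hypothetical sketch, not a model of KataGo or of anything the KataGo team actually does: rock-paper-scissors stands in for Go, `always_rock` stands in for a frozen policy, `exploit` stands in for an adversarial policy that assumes its opponent repeats itself, and all the names are invented. The frozen strategy loses every round, forever, because nothing in it represents winning or losing. The only thing that changes the outcome is an outer loop that keeps score and swaps the strategy out when it keeps losing.

```python
import random
from collections import deque
from typing import Callable, Optional

BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}  # key beats value
COUNTER = {loser: winner for winner, loser in BEATS.items()}  # COUNTER[m] beats m


def always_rock() -> str:
    # A frozen strategy: a move for every situation, and no notion of the score.
    return "rock"


def exploit(opponent_last: Optional[str]) -> str:
    # A stand-in adversarial policy: assume the opponent repeats its last move.
    return COUNTER[opponent_last] if opponent_last else "paper"


def outcome(mine: str, theirs: str) -> int:
    # +1 for a win, -1 for a loss, 0 for a draw, from our point of view.
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def play_match(rounds: int, adapt: bool, window: int = 5, seed: int = 0) -> int:
    """Play `rounds` games against `exploit` and return the net score."""
    rng = random.Random(seed)

    def mixed() -> str:
        # Fallback strategy: pick uniformly at random, so nothing repeats predictably.
        return rng.choice(sorted(BEATS))

    policy: Callable[[], str] = always_rock
    recent: deque = deque(maxlen=window)
    last: Optional[str] = None
    score = 0
    for _ in range(rounds):
        mine = policy()
        result = outcome(mine, exploit(last))
        score += result
        recent.append(result)
        last = mine
        # The outer loop: notice a losing streak, and decide to play differently.
        if adapt and len(recent) == window and all(r == -1 for r in recent):
            policy = mixed
            recent.clear()
    return score


print(play_match(100, adapt=False))  # -100: it never notices
print(play_match(100, adapt=True))
```

The wrapper is, of course, just a bigger set of rules; a threshold on a losing streak is a caricature of noticing and caring, not an implementation of it. What the sketch does show is that the fix lives outside the strategy, in whatever is keeping score.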

So that’s what makes this philosophical puzzle more than just a curiosity. Because it’s an engineering problem. Making AI systems robust to adversarial policies is a difficult and important problem. A problem whose solution may well require figuring out how to make AI systems capable of seeing the game they are playing, of noticing, caring, and deciding. And it’s not just AI safety. The reason AI sucks at poetry is also fundamentally related to this same problem. It’s my view that a version of this problem is the reason modern AI, despite being amazing at so many tasks, also sort of sucks at all of them.

I’m not saying that making an AI that can play a game means solving the hard problem of consciousness. But I’m not exactly not saying that either. Maybe consciousness was just evolution’s way of solving the engineering problem of how to prevent intelligence from getting stuck inside of games. I don’t know. But I do know that, like it or not, doing practical AI engineering in 2024 means wrestling with some thorny philosophical puzzles.3

So, there you have it. That’s my theory of why current AI can’t play games, and how it could. I’m not like John Searle, making some grand impossibility claim. I think it’s a tractable problem, but I do think it means making some fundamental changes to our approach.

And, unlike my friend, I think we have more than one way to measure the brilliance of Chess players. For example, Magnus Carlsen, who is not only the greatest living Chess player, but quite possibly the greatest to have ever lived, is bored of Chess. “I find classical Chess stressful and boring.”

That’s what it looks like to not be trapped inside of Chess. That’s how to play a game.

Wait, can you not see things you are fully embedded in? Aren’t we fully-embedded in the universe? Does that mean we can’t see the universe? What does it mean to be fully-embedded in a thing anyway? Quick, someone explain Heidegger to me.

Yeah, I know that literally none of us have been in this situation.

I like it.

Very much agree with BCS.

> solution may well require figuring out how to make AI systems capable of seeing the game they are playing, of noticing, caring, and deciding.

Brian Cantwell Smith argues for this in _The Promise of Artificial Intelligence: Reckoning and Judgment_ (2019): that this is what it would take (and not that it is impossible).